Summary of Stanford's Machine Learning class by Andrew Ng

- Part 1: Supervised vs. Unsupervised learning, Linear Regression, Logistic Regression, Gradient Descent

- Part 2

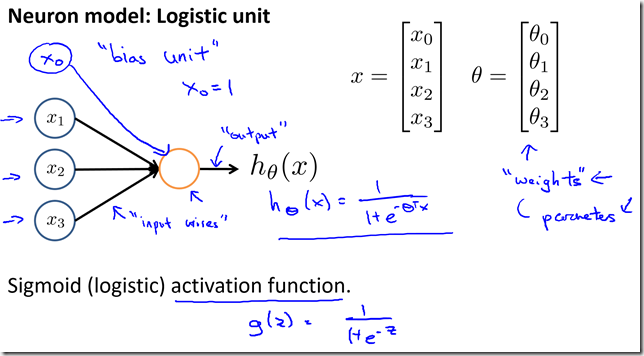

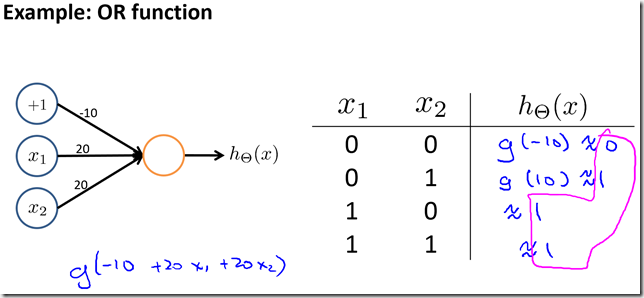

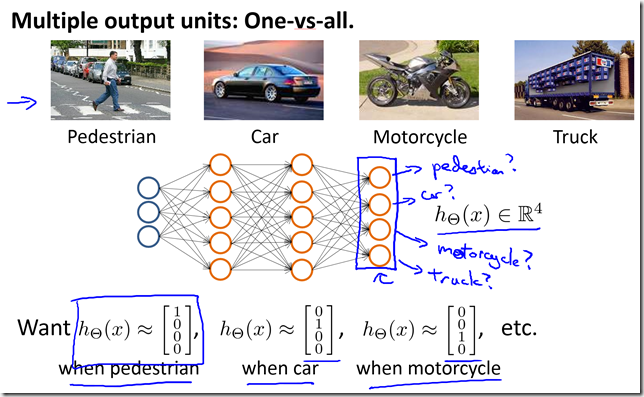

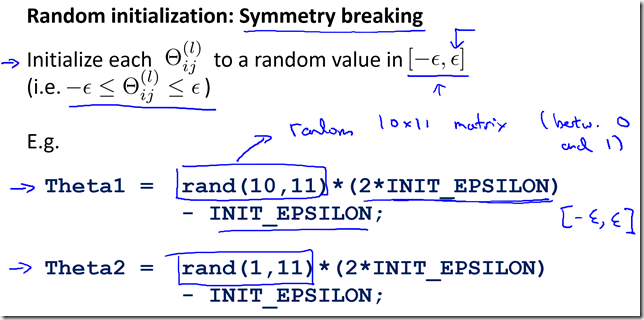

- Regularization, Neural Networks

- Part 3: Debugging and Diagnostics, Machine Learning System Design

- Part 4

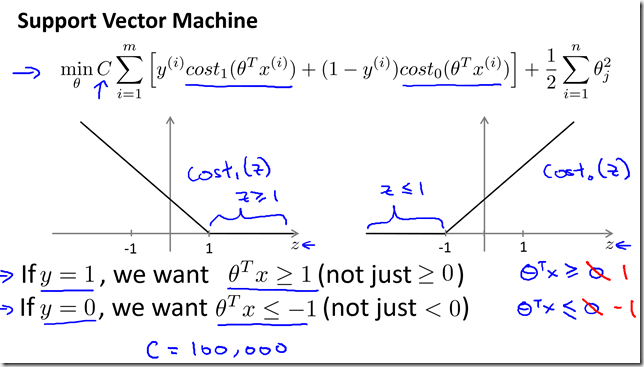

- Support Vector Machine, Kernels

- Part 5: K-means algorithm, Principal Component Analysis (PCA) algorithm

- Part 6: Anomaly detection, Multivariate Gaussian distribution

- Part 7: Recommender Systems, Collaborative filtering algorithm, Mean normalization

- Part 8: Stochastic gradient descent, Mini-batch gradient descent, Map-reduce and data parallelism

Anomaly detection

- Examples

  - Fraud Detection

    - x(i) = features of user i's activities.

    - Model p(x) from data.

    - Identify unusual users by checking which have p(x) < epsilon.

  - Manufacturing

  - Monitoring computers in a data center


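- Algorithm

  - Choose features $x_j$ that you think might be indicative of anomalous examples.

  - Fit parameters $\mu_1, \dots, \mu_n, \sigma_1^2, \dots, \sigma_n^2$ on the training set $\{x^{(1)}, \dots, x^{(m)}\}$:

    $$\mu_j = \frac{1}{m}\sum_{i=1}^{m} x_j^{(i)}, \qquad \sigma_j^2 = \frac{1}{m}\sum_{i=1}^{m}\left(x_j^{(i)} - \mu_j\right)^2$$

  - Given a new example $x$, compute $p(x)$:

    $$p(x) = \prod_{j=1}^{n} p(x_j; \mu_j, \sigma_j^2) = \prod_{j=1}^{n} \frac{1}{\sqrt{2\pi}\,\sigma_j} \exp\left(-\frac{(x_j - \mu_j)^2}{2\sigma_j^2}\right)$$

  - Anomaly if $p(x) < \varepsilon$.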
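The algorithm steps can be sketched in Python as follows. This is a minimal sketch, assuming the training set is a list of equal-length feature lists containing only normal examples; the function names `fit_gaussian`, `p_x` and `is_anomaly` and the data are hypothetical, and epsilon is assumed to be chosen separately (for example on a cross-validation set).

```python
import math
from typing import List, Sequence, Tuple


def fit_gaussian(X: Sequence[Sequence[float]]) -> Tuple[List[float], List[float]]:
    """Estimate mu_j and sigma_j^2 for each feature j (maximum-likelihood, dividing by m)."""
    m, n = len(X), len(X[0])
    mu = [sum(row[j] for row in X) / m for j in range(n)]
    sigma2 = [sum((row[j] - mu[j]) ** 2 for row in X) / m for j in range(n)]
    return mu, sigma2


def p_x(x: Sequence[float], mu: Sequence[float], sigma2: Sequence[float]) -> float:
    """p(x) as a product of univariate Gaussian densities, one per feature."""
    density = 1.0
    for xj, mj, s2 in zip(x, mu, sigma2):
        density *= math.exp(-((xj - mj) ** 2) / (2 * s2)) / math.sqrt(2 * math.pi * s2)
    return density


def is_anomaly(x: Sequence[float], mu: Sequence[float], sigma2: Sequence[float], epsilon: float) -> bool:
    """Flag x as anomalous when p(x) < epsilon."""
    return p_x(x, mu, sigma2) < epsilon


# Hypothetical training data: 4 normal examples with n = 2 features.
X_train = [[1.0, 10.0], [2.0, 11.0], [3.0, 12.0], [2.0, 9.0]]
mu, sigma2 = fit_gaussian(X_train)  # mu = [2.0, 10.5], sigma2 = [0.5, 1.25]
print(is_anomaly([2.0, 10.5], mu, sigma2, epsilon=1e-3))  # False (at the mean)
print(is_anomaly([10.0, 0.0], mu, sigma2, epsilon=1e-3))  # True (far from the data)
```

Each feature gets its own independent Gaussian, so fitting is just a per-feature mean and variance; the price is that correlations between features are ignored.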

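- Aircraft engines example

  - 10000 good (normal) engines

  - 20 flawed (anomalous) engines

  - Alternative 1:

    - Training set: 6000 good engines

    - CV: 2000 good engines (y=0), 10 anomalous (y=1)

    - Test: 2000 good engines (y=0), 10 anomalous (y=1)

  - Alternative 2:

    - Training set: 6000 good engines

    - CV: 4000 good engines (y=0), 10 anomalous (y=1)

    - Test: 4000 good engines (y=0), 10 anomalous (y=1)

    - Since 6000 + 4000 = 10000, the CV and test sets here must reuse the same 4000 good engines. Testing on data that was also used for tuning (e.g. for choosing epsilon) gives an optimistic estimate, so Alternative 1 is preferable.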
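A minimal sketch of the Alternative 1 split, assuming each engine is identified by a hypothetical integer ID and that the assignment is random; `split_alternative_1` is an illustrative name, not something from the course. Only good engines go into the training set, and the anomalous engines are divided evenly between CV and test, so no engine appears in two sets.

```python
import random
from typing import List, Sequence, Tuple

Labeled = List[Tuple[int, int]]  # (engine_id, y) pairs, y=1 means anomalous


def split_alternative_1(
    good: Sequence[int], anomalous: Sequence[int], seed: int = 0
) -> Tuple[List[int], Labeled, Labeled]:
    rng = random.Random(seed)
    good, anomalous = list(good), list(anomalous)
    rng.shuffle(good)
    rng.shuffle(anomalous)
    n_train = 3 * len(good) // 5        # 60% of good engines -> training set
    n_cv = (len(good) - n_train) // 2   # half of the remaining good engines -> CV
    half = len(anomalous) // 2          # anomalies split evenly between CV and test
    train = good[:n_train]              # training data contains only normal engines
    cv = [(e, 0) for e in good[n_train:n_train + n_cv]] + [(e, 1) for e in anomalous[:half]]
    test = [(e, 0) for e in good[n_train + n_cv:]] + [(e, 1) for e in anomalous[half:]]
    return train, cv, test


# Hypothetical IDs: 0..9999 are good engines, 10000..10019 are flawed ones.
train, cv, test = split_alternative_1(range(10000), range(10000, 10020))
print(len(train), len(cv), len(test))  # 6000 2010 2010
```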

- Algorithm Evaluation
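A common way to evaluate the detector, sketched here under assumptions: fit p(x) on the training set, then on the labeled CV set predict y=1 when p(x) < epsilon and score the predictions with precision, recall and F1 score. Plain accuracy is a poor metric in this setting because y=1 is so rare that always predicting y=0 already scores highly. The sketch also picks epsilon as the CV threshold with the best F1; `f1_score` and `select_epsilon` are hypothetical helper names, and the densities in the usage lines are made up.

```python
from typing import Sequence, Tuple


def f1_score(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    if tp == 0:
        return 0.0  # no true positives: precision and recall are 0 or undefined
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def select_epsilon(p_cv: Sequence[float], y_cv: Sequence[int]) -> Tuple[float, float]:
    """Return (epsilon, F1) for the candidate threshold with the highest CV F1.

    Returns (0.0, -1.0) if p_cv has fewer than two distinct values.
    """
    values = sorted(set(p_cv))
    # Candidates lie halfway between consecutive distinct densities, so each one
    # flags (p < eps) every CV example at or below one of the distinct values.
    candidates = [(a + b) / 2 for a, b in zip(values, values[1:])]
    best_eps, best_f1 = 0.0, -1.0
    for eps in candidates:
        y_pred = [1 if p < eps else 0 for p in p_cv]
        f1 = f1_score(y_cv, y_pred)
        if f1 > best_f1:
            best_eps, best_f1 = eps, f1
    return best_eps, best_f1


p_cv = [0.001, 0.002, 0.3, 0.25, 0.4, 0.35]  # hypothetical p(x) values on a CV set
y_cv = [1, 1, 0, 0, 0, 0]
eps, f1 = select_epsilon(p_cv, y_cv)
print(f"epsilon={eps:.4f}, F1={f1:.2f}")  # epsilon=0.1260, F1=1.00
```

The chosen epsilon would then be reported on the separate test set, which was not used for the choice.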

- Anomaly detection vs. Supervised learning

| Anomaly detection | Supervised learning |
| --- | --- |
| Very small number of positive examples (y=1); large number of negative examples (y=0). | Large number of positive and negative examples. |
| Many different “types” of anomalies. Hard for any algorithm to learn from positive examples what the anomalies look like; future anomalies may look nothing like any of the anomalous examples we’ve seen so far. | Enough positive examples for the algorithm to get a sense of what positive examples are like; future positive examples are likely to be similar to ones in the training set. |
| Fraud detection | Email spam classification |
| Manufacturing (e.g. aircraft engines) | Weather prediction (sunny/rainy/etc.) |
| Monitoring machines in a data center | Cancer classification |

- Choose what features to use

  - Plot a histogram of each feature to check whether its distribution looks roughly Gaussian.
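A minimal sketch of the histogram check using only the standard library, for when no plotting library is at hand. The feature values are hypothetical log-normal draws, and the log transform shown is one common way (an assumption, not stated in these notes) to make a right-skewed feature look more Gaussian before modeling it with p(x).

```python
import math
import random
from typing import List, Sequence


def histogram(values: Sequence[float], bins: int = 10) -> List[int]:
    """Count values into `bins` equal-width bins spanning [min(values), max(values)]."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / bins or 1.0  # all values equal -> everything lands in bin 0
    counts = [0] * bins
    for v in values:
        idx = min(int((v - lo) / width), bins - 1)  # the maximum goes in the last bin
        counts[idx] += 1
    return counts


def print_histogram(values: Sequence[float], bins: int = 10, title: str = "") -> None:
    """Crude text histogram: one row of '#' per bin."""
    print(title)
    for count in histogram(values, bins):
        print("#" * count)


# Hypothetical right-skewed feature (log-normal draws).
rng = random.Random(0)
feature = [rng.lognormvariate(0.0, 1.0) for _ in range(100)]
print_histogram(feature, title="x")                              # long right tail
print_histogram([math.log(v) for v in feature], title="log(x)")  # closer to bell-shaped
```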

- Original vs. Multivariate Gaussian model
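A sketch comparing the two models for n = 2 features on hypothetical data. The original model multiplies independent univariate densities. The multivariate Gaussian fits $\mu$ and a full covariance matrix $\Sigma = \frac{1}{m}\sum_{i=1}^{m}(x^{(i)} - \mu)(x^{(i)} - \mu)^T$ and uses $p(x) = \frac{1}{(2\pi)^{n/2}|\Sigma|^{1/2}}\exp\left(-\frac{1}{2}(x-\mu)^T\Sigma^{-1}(x-\mu)\right)$, so it can capture correlated features. The original model is the special case where $\Sigma$ is diagonal with entries $\sigma_1^2, \dots, \sigma_n^2$. The 2×2 inverse is written out by hand to stay dependency-free; the function names are illustrative, and with more features a linear-algebra library would be the usual choice.

```python
import math
from typing import Sequence, Tuple

Vec2 = Tuple[float, float]
Mat2 = Tuple[Vec2, Vec2]


def p_original(x: Sequence[float], mu: Sequence[float], sigma2: Sequence[float]) -> float:
    """Original model: product of independent univariate Gaussians N(x_j; mu_j, sigma_j^2)."""
    out = 1.0
    for xj, mj, s2 in zip(x, mu, sigma2):
        out *= math.exp(-((xj - mj) ** 2) / (2 * s2)) / math.sqrt(2 * math.pi * s2)
    return out


def fit_multivariate_2d(X: Sequence[Sequence[float]]) -> Tuple[Vec2, Mat2]:
    """mu = mean of the rows, Sigma = (1/m) * sum of (x - mu)(x - mu)^T."""
    m = len(X)
    mu = (sum(r[0] for r in X) / m, sum(r[1] for r in X) / m)
    s = [[0.0, 0.0], [0.0, 0.0]]
    for r in X:
        d = (r[0] - mu[0], r[1] - mu[1])
        for i in range(2):
            for j in range(2):
                s[i][j] += d[i] * d[j] / m
    return mu, ((s[0][0], s[0][1]), (s[1][0], s[1][1]))


def p_multivariate_2d(x: Sequence[float], mu: Vec2, cov: Mat2) -> float:
    """exp(-1/2 (x-mu)^T Sigma^-1 (x-mu)) / ((2 pi)^(n/2) |Sigma|^(1/2)) with n = 2."""
    (a, b), (c, d) = cov
    det = a * d - b * c
    if det <= 0:
        raise ValueError("covariance must be positive definite (det > 0)")
    inv = ((d / det, -b / det), (-c / det, a / det))  # closed-form 2x2 inverse
    dx = (x[0] - mu[0], x[1] - mu[1])
    quad = sum(dx[i] * inv[i][j] * dx[j] for i in range(2) for j in range(2))
    return math.exp(-0.5 * quad) / (2 * math.pi * math.sqrt(det))


# Hypothetical, strongly correlated training data (points near the line y = x).
X = [[1.0, 1.1], [2.0, 1.9], [3.0, 3.2], [4.0, 3.9], [5.0, 5.1]]
mu, cov = fit_multivariate_2d(X)
x_new = (4.0, 2.0)  # each coordinate is within range, but the pair breaks the correlation
print("original:    ", p_original(x_new, mu, (cov[0][0], cov[1][1])))
print("multivariate:", p_multivariate_2d(x_new, mu, cov))
```

Because the training points lie close to the line y = x, the multivariate model assigns (4, 2) a far lower density than the original model does, which is the kind of anomaly the original model tends to miss.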