Summary of Stanford's Machine Learning class by Andrew Ng

- Part 1

- Supervised vs. Unsupervised learning, Linear Regression, Logistic Regression, Gradient Descent

- Part 2

- Regularization, Neural Networks

- Part 3

- Debugging and Diagnostics, Machine Learning System Design

- Part 4

- Support Vector Machine, Kernels

- Part 5

- K-means algorithm, Principal Component Analysis (PCA) algorithm

- Part 6

- Anomaly detection, Multivariate Gaussian distribution

- Part 7

- Recommender Systems, Collaborative filtering algorithm, Mean normalization

- Part 8

- Stochastic gradient descent, Mini batch gradient descent, Map-reduce and data parallelism

Introduction

- “algorithms for inferring unknowns from knowns”

- Arthur Samuel is regarded as a pioneer of ML. He created one of the first self-learning programs, which played checkers.

- “A computer program is said to learn from experience E with respect to some class of tasks T and performance measure P, if its performance at tasks in T, as measured by P, improves with experience E” – Tom Mitchell

- Used for data mining (e.g. web click data, medical records, biology) and for applications which can’t be programmed by hand (e.g. autonomous helicopter, handwriting recognition, natural language processing, computer vision…)

- Supervised Learning

- Where right answers are given.

- Regression – Predict continuous output (e.g. house price)

- Classification – Discrete valued output 0 or 1 (e.g. breast cancer, malignant or benign)

- Features – e.g. size of house, number of bedrooms in house, clump thickness, uniformity of cell size.

- Unsupervised Learning

- No right answers (labels) are given; the algorithm must find structure in the data, e.g. by clustering it.

- Possible applications:

- Social network analysis

- Market segmentation

- Astronomical data analysis

- Gene classification

- Organize computing clusters in data center.

- Cocktail Party problem – Separate overlapping audio sources (e.g. two voices, or a voice and background noise) from mixed recordings

Supervised Learning

Training Set -> Learning Algorithm -> [ X -> Hypothesis -> Estimated Value (Y) ]

Linear Regression

- Linear Regression with One Variable, or Univariate Linear Regression

- Gradient Descent Algorithm

- If α is too small, gradient descent can be slow.

- If α is too large, gradient descent can overshoot the minimum. It may fail to converge, or even diverge.

- In general a cost function can have multiple local optima but only one global optimum.

- For linear regression the cost function is convex (“bowl” shaped), so it has only a global optimum and no other local optima.
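The gradient descent update for univariate linear regression can be sketched as follows. This is a minimal illustration, not the course's own code: the function name `gradient_descent`, the default `alpha` and `iterations`, and the toy data are all assumptions made for the example.

```python
def gradient_descent(xs, ys, alpha=0.1, iterations=1000):
    """Batch gradient descent for h(x) = theta0 + theta1 * x."""
    m = len(xs)
    theta0, theta1 = 0.0, 0.0
    for _ in range(iterations):
        # Prediction errors under the current parameters.
        errors = [theta0 + theta1 * x - y for x, y in zip(xs, ys)]
        # Partial derivatives of J = (1 / 2m) * sum(errors^2).
        grad0 = sum(errors) / m
        grad1 = sum(e * x for e, x in zip(errors, xs)) / m
        # Simultaneous update of both parameters.
        theta0 -= alpha * grad0
        theta1 -= alpha * grad1
    return theta0, theta1


# Toy data generated from y = 1 + 2x (made up for illustration).
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]
theta0, theta1 = gradient_descent(xs, ys)
```

On this toy data the parameters should approach theta0 = 1 and theta1 = 2. Raising `alpha` far enough makes the updates overshoot and diverge, which is the failure mode described above.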

- Linear Algebra (101)

- Matrices and Vectors

- Addition + Scalar multiplication

- Matrix-vector multiplication

- Matrix-Matrix multiplication

- Properties of matrix multiplication: not commutative (in general), associative, identity matrix

- Inverse and Transpose
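Matrix multiplication is associative and has an identity element, but in general A × B differs from B × A. A small check in plain Python makes this concrete; the helper `matmul` and the example matrices are assumptions chosen for illustration.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


A = [[1, 2], [3, 4]]
B = [[0, 1], [1, 0]]
C = [[2, 0], [1, 3]]
I = [[1, 0], [0, 1]]  # 2 x 2 identity matrix

# A * B and B * A differ, so multiplication is not commutative here.
not_commutative = matmul(A, B) != matmul(B, A)
# (A * B) * C equals A * (B * C).
associative = matmul(matmul(A, B), C) == matmul(A, matmul(B, C))
# Multiplying by the identity on either side leaves A unchanged.
identity_ok = matmul(A, I) == A == matmul(I, A)
```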

- Linear Regression with Multiple Features

- Gradient Descent for multiple variables

- Feature Scaling

- Get every feature into approximately a –1 <= x <= 1 range. –3 to 3 is also OK, but much larger ranges are not good.

- Use “Mean Normalization”: (x – μ) / s, where μ is the average value of x in the training set and s is the range (max – min) or the standard deviation of x.
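Mean normalization of a single feature column might look like the sketch below. It uses the range (max minus min) for s; the function name `mean_normalize` and the house sizes are made up for the example.

```python
def mean_normalize(values):
    """Scale one feature column to roughly [-1, 1] using (x - mu) / s."""
    mu = sum(values) / len(values)
    s = max(values) - min(values)  # range; the standard deviation also works
    if s == 0:
        # A constant feature has no spread to scale by.
        return [0.0 for _ in values]
    return [(v - mu) / s for v in values]


# Hypothetical house sizes in square feet.
sizes = [1000.0, 1500.0, 2000.0, 2500.0]
scaled = mean_normalize(sizes)
```

With s chosen as the range, the scaled values span exactly one unit and are centered on zero.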

- Learning rate

- If α is too small: slow convergence.

- If α is too large: may not decrease on every iteration; may not converge.

- To choose α, try 0.001, 0.01, 0.1, 1....

- Features and Polynomial regression

- Features can be calculated from other features (e.g. frontage * depth = new feature for house)

- Normal Equation

- Method to solve for theta analytically
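The normal equation is theta = (XᵀX)⁻¹ Xᵀy. As a rough sketch for the one-feature case (where XᵀX is only 2 × 2 and can be inverted by hand), the function below solves it directly. The name `normal_equation_1d` and the toy data are assumptions; a real implementation would use a linear algebra library.

```python
def normal_equation_1d(xs, ys):
    """Solve theta = (X^T X)^(-1) X^T y for h(x) = theta0 + theta1 * x."""
    m = len(xs)
    sx = sum(xs)
    sxx = sum(x * x for x in xs)
    sy = sum(ys)
    sxy = sum(x * y for x, y in zip(xs, ys))
    # X^T X = [[m, sx], [sx, sxx]] and X^T y = [sy, sxy].
    det = m * sxx - sx * sx
    if det == 0:
        raise ValueError("X^T X is singular (all x values are identical)")
    # Apply the closed-form inverse of a 2 x 2 matrix.
    theta0 = (sxx * sy - sx * sxy) / det
    theta1 = (m * sxy - sx * sy) / det
    return theta0, theta1


# Toy data generated from y = 1 + 2x (made up for illustration).
theta0, theta1 = normal_equation_1d([0.0, 1.0, 2.0, 3.0], [1.0, 3.0, 5.0, 7.0])
```

Unlike gradient descent, this needs no learning rate and no iterations, at the cost of inverting XᵀX.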

Logistic Regression

- Classification

- Examples: Email (spam/ no spam), online transactions fraudulent (yes,no), Tumor (malignant, benign)

- The label y is either 0 or 1; the hypothesis outputs a value between 0 and 1, read as the estimated probability that y = 1

- Use Sigmoid function/Logistic Function

- Decision Boundary

- It is a property of the “hypothesis” (its parameters) and not of the dataset

- Linear and Non-Linear boundaries.

- With higher-order polynomial features it is possible to represent much more complex non-linear decision boundaries.
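The sigmoid hypothesis and a linear decision boundary can be sketched as follows. The parameter vector is hypothetical (not learned from data), and the names `hypothesis` and `predict` are assumptions for the example.

```python
import math


def sigmoid(z):
    """Logistic function g(z) = 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + math.exp(-z))


def hypothesis(theta, x):
    """h(x) = g(theta^T x); x[0] is assumed to be the bias feature 1."""
    return sigmoid(sum(t * xi for t, xi in zip(theta, x)))


def predict(theta, x):
    """Predict 1 when h(x) >= 0.5, i.e. when theta^T x >= 0."""
    return 1 if hypothesis(theta, x) >= 0.5 else 0


# Hypothetical parameters: the decision boundary is the line x1 + x2 = 3.
theta = [-3.0, 1.0, 1.0]
below = predict(theta, [1.0, 1.0, 1.0])  # x1 + x2 = 2, below the boundary
above = predict(theta, [1.0, 2.0, 2.0])  # x1 + x2 = 4, above the boundary
```

The boundary depends only on `theta`: changing the training points without changing `theta` leaves it where it is.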

- Cost Function

- How to choose parameter theta ?

- Gradient Descent
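A minimal sketch of the logistic regression cost and one gradient descent step, assuming the usual cross-entropy cost J = –(1/m) Σ [y log h + (1 – y) log(1 – h)]. The function names, the learning rate, and the four-point dataset are illustrative assumptions.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def cost(theta, X, y):
    """Cross-entropy cost averaged over the m training examples."""
    total = 0.0
    for xi, yi in zip(X, y):
        h = sigmoid(sum(t * v for t, v in zip(theta, xi)))
        total += yi * math.log(h) + (1 - yi) * math.log(1 - h)
    return -total / len(y)


def gradient_step(theta, X, y, alpha):
    """One simultaneous update: theta_j -= alpha * (1/m) * sum((h - y) * x_j)."""
    m = len(y)
    errors = [sigmoid(sum(t * v for t, v in zip(theta, xi))) - yi
              for xi, yi in zip(X, y)]
    return [t - alpha * sum(e * xi[j] for e, xi in zip(errors, X)) / m
            for j, t in enumerate(theta)]


# Toy data (made up): first column is the bias feature 1.
X = [[1.0, 0.5], [1.0, 1.5], [1.0, 2.5], [1.0, 3.5]]
y = [0, 0, 1, 1]
theta = [0.0, 0.0]
initial_cost = cost(theta, X, y)
for _ in range(200):
    theta = gradient_step(theta, X, y, alpha=0.1)
final_cost = cost(theta, X, y)
```

With all parameters at zero every prediction is 0.5, so the starting cost is log 2; the cost should fall from there as the steps proceed.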

- Advanced Optimizations

- Algorithms : Conjugate gradient, BFGS, L-BFGS

- Advantages : No need to manually pick “learning rate alpha”, often faster than gradient descent

- Disadvantages: more complex

- Multi-class Classification: One-vs-all
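In one-vs-all, one binary classifier is trained per class and the prediction is the class whose classifier reports the highest h(x). The sketch below shows only the prediction step; the three parameter vectors are hypothetical stand-ins for already-trained classifiers.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_one_vs_all(thetas, x):
    """Return the index of the classifier with the highest h(x)."""
    scores = [sigmoid(sum(t * v for t, v in zip(theta, x))) for theta in thetas]
    return max(range(len(scores)), key=lambda k: scores[k])


# Hypothetical parameters for 3 classes (bias weight first).
thetas = [
    [2.0, -1.0, -1.0],  # class 0: confident when x1 and x2 are both small
    [-3.0, 2.0, -1.0],  # class 1: confident when x1 is large
    [-3.0, -1.0, 2.0],  # class 2: confident when x2 is large
]
label_a = predict_one_vs_all(thetas, [1.0, 0.0, 0.0])
label_b = predict_one_vs_all(thetas, [1.0, 4.0, 0.0])
label_c = predict_one_vs_all(thetas, [1.0, 0.0, 4.0])
```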

Regularization

- Problem of Overfitting

- Options to address overfitting:

- Reduce number of features

- Manually select which features to keep

- Model selection algorithm (later in course)

- Regularization

- Keep all features but reduce magnitude/values of parameters theta.

- works well when we have lots of features, each of which contributes a bit to predicting y.

- Too much regularization can “underfit” the training set and this can lead to worse performance even for examples not in the training set.

- Cost Function

- If lambda is too large (e.g. 10^10), the algorithm “underfits” (fails to fit even the training set)
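The regularized cost for linear regression adds a penalty on the squared parameters, skipping the bias term: J = (1/2m) [Σ (h – y)² + lambda Σ theta_j² for j >= 1]. A minimal sketch, with the function name and toy data assumed for illustration:

```python
def regularized_cost(theta, X, y, lam):
    """Squared-error cost plus an L2 penalty on theta[1:]."""
    m = len(y)
    squared_error = sum(
        (sum(t * v for t, v in zip(theta, xi)) - yi) ** 2
        for xi, yi in zip(X, y)
    )
    # theta[0] multiplies the bias feature and is not penalized.
    penalty = lam * sum(t * t for t in theta[1:])
    return (squared_error + penalty) / (2 * m)


# Toy data (made up): first column is the bias feature 1.
X = [[1.0, 0.0], [1.0, 1.0], [1.0, 2.0]]
y = [1.0, 3.0, 5.0]
theta = [1.0, 2.0]  # fits the toy data exactly
no_penalty = regularized_cost(theta, X, y, lam=0.0)
with_penalty = regularized_cost(theta, X, y, lam=3.0)
```

Even a perfect fit pays a cost once lambda is positive. With a very large lambda, minimizing J pushes theta[1:] toward zero, leaving a near-constant hypothesis that underfits.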

- Regularization with Linear Regression

- Normal Equation

- Regularization with Logistic Regression

Neural Networks

- Introduction

- Algorithms that try to mimic the brain. They were very widely used in the 80s and early 90s; their popularity diminished in the late 90s.

- Send a signal from almost any sensor to a brain region and it will learn to deal with it. E.g. the auditory cortex can learn to see, and the somatosensory cortex can learn to see.

- Examples: seeing with your tongue, human echolocation, a third eye for a frog.

- Model Representation

- Forward propagation

- Non-linear classification example: XOR/XNOR

- Building block: AND (a single sigmoid unit suffices)

- Building block: OR (a single sigmoid unit suffices)

- XNOR: combine AND, (NOT x1) AND (NOT x2), and OR units across two layers

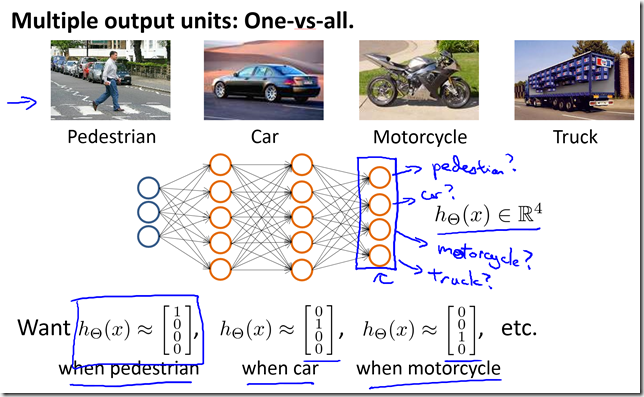
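Forward propagation through a small XNOR network can be sketched as below. The weights are the ones commonly shown for this example in the course, and should be treated as illustrative; the helper names `unit` and `xnor` are assumptions.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def unit(weights, inputs):
    """One sigmoid unit; weights[0] multiplies the bias input 1."""
    return sigmoid(weights[0] + sum(w * x for w, x in zip(weights[1:], inputs)))


def xnor(x1, x2):
    # Hidden layer: two units computed from the raw inputs.
    a1 = unit([-30.0, 20.0, 20.0], [x1, x2])   # x1 AND x2
    a2 = unit([10.0, -20.0, -20.0], [x1, x2])  # (NOT x1) AND (NOT x2)
    # Output layer: OR of the two hidden activations.
    return unit([-10.0, 20.0, 20.0], [a1, a2])


# Round the sigmoid output to get a 0/1 answer for each input pair.
truth_table = {(a, b): round(xnor(a, b)) for a in (0, 1) for b in (0, 1)}
```

No single unit can compute XNOR because the two classes are not linearly separable; the hidden layer supplies the intermediate features that make the final OR possible.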

- Multi-class classification

- Cost Function

- As in logistic regression, we DO NOT include the “Bias Unit” parameters in the regularization term of the neural network cost.

- Just as in logistic regression, a large value of lambda will penalize large parameter values, thereby reducing the chance of overfitting the training set.

- Backpropagation algorithm

- Unrolling parameters

- Gradient Checking

- There may be bugs in forward/back propagation algorithms even if the cost function looks correct.

- Gradient checking helps identify these bugs.

- Implementation Note:

- Implement backprop to compute DVec (unrolled).

- Implement numerical gradient check to compute gradApprox.

- Make sure they give similar values.

- Turn off gradient checking and use the backprop code for learning.

- Be sure to disable your gradient checking code before training your classifier. If you run the numerical gradient computation on every iteration of gradient descent (or in the inner loop of costFunction(…)), your code will be very slow.
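A numerical gradient check can be sketched with a two-sided difference, (J(theta + ε) – J(theta – ε)) / (2ε), applied to one parameter at a time. Here a simple quadratic stands in for the network cost, and its hand-written gradient stands in for backprop's DVec; both stand-ins and all function names are assumptions for the example.

```python
def numerical_gradient(cost_fn, theta, epsilon=1e-4):
    """Approximate each partial derivative with a two-sided difference."""
    grad = []
    for i in range(len(theta)):
        plus = list(theta)
        minus = list(theta)
        plus[i] += epsilon
        minus[i] -= epsilon
        grad.append((cost_fn(plus) - cost_fn(minus)) / (2 * epsilon))
    return grad


def toy_cost(theta):
    """Stand-in cost: J = theta0^2 + 3 * theta0 * theta1."""
    return theta[0] ** 2 + 3 * theta[0] * theta[1]


def toy_analytic_gradient(theta):
    """Hand-derived gradient of toy_cost (plays the role of backprop)."""
    return [2 * theta[0] + 3 * theta[1], 3 * theta[0]]


theta = [1.5, -2.0]
grad_approx = numerical_gradient(toy_cost, theta)
d_vec = toy_analytic_gradient(theta)
max_diff = max(abs(a - b) for a, b in zip(grad_approx, d_vec))
```

Each check costs two cost evaluations per parameter, which is why it is run once to validate the analytic gradient and then switched off.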

- Random initialization

- Initializing theta to 0 works for logistic regression but it does not work for a neural network.

- If we initialize theta to 0 for a neural network, then after each update the parameters corresponding to the inputs going into each hidden unit are identical, so all hidden units compute the same function.

- This is the “Problem of Symmetric Weights”.

- To break the symmetry, initialize the theta values randomly.
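Random initialization is typically done by drawing each weight uniformly from a small interval around zero. In the sketch below, the function name `random_init`, the default bound `epsilon_init=0.12`, and the layer shape are assumptions for illustration.

```python
import random


def random_init(rows, cols, epsilon_init=0.12, seed=None):
    """Return a rows x cols matrix with entries in [-epsilon_init, epsilon_init]."""
    rng = random.Random(seed)
    return [[rng.uniform(-epsilon_init, epsilon_init) for _ in range(cols)]
            for _ in range(rows)]


# Hypothetical layer: 3 hidden units, each fed by a bias and 2 inputs.
theta1 = random_init(3, 3, seed=0)
```

Because each row (one hidden unit's weights) starts out different, the units receive different gradients and no longer stay identical after each update.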

- Training a neural network
